2023 HPCC Systems Internship Summary

During my internship at HPCC Systems in 2023, I had the incredible opportunity to work on a dynamic project that pushed the boundaries of my technical skills and knowledge. In this post, I will summarize the key aspects and achievements of my internship project, highlighting the challenges I faced, the solutions I implemented, and the valuable lessons I learned along the way.

Background and Motivation

HPCC Systems: HPCC Systems is an open-source, distributed platform for solving big data challenges, programmed in the ECL language.

Generalized Neural Network (GNN) bundle: This bundle provides a generalized ECL interface to Keras over TensorFlow.

The GNN bundle was originally developed against the TensorFlow 1.5 interface and supported TensorFlow 2.0 only through a TensorFlow compatibility mode. This project had the following goals:

- Adapt GNN to directly use the TensorFlow 2.0 interface: Our primary objective is to migrate the GNN bundle to directly integrate with the TensorFlow 2.0 interface, leveraging its improved features and capabilities for enhanced performance and efficiency.

- Provide effective support for GPUs: We intend to ensure effective GPU support, allowing users to harness graphics processing units for accelerated neural network computation and faster training.

- Full support for pre-trained models: The upgraded GNN bundle will fully support state-of-the-art pre-trained neural network models, enabling users to build on these architectures and achieve better results for their specific tasks.

- Save/Load trained model for later reuse: We want users to be able to save their trained models and load them again for later use.

Technical details

Upgrade functions to TensorFlow 2.x

We upgraded the following functions to native TensorFlow 2.x without changing their API:

- DefineModel: Define a Keras/TensorFlow model using Keras syntax.

- DefineFuncModel: Construct a Keras Functional Model.

These functions play a crucial role in defining the neural network architecture. In the upgraded GNN bundle, both functions now use the TensorFlow 2.x API. DefineModel follows a sequential approach, in which layers are stacked in a strict order. DefineFuncModel, by contrast, offers a more flexible definition, allowing users to specify not only the shape of each layer but also the connections between layers. These upgraded functions also support loading state-of-the-art models, giving users access to more advanced and powerful neural network architectures for their tasks.
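The difference between a strict stack and a model with explicit connections can be illustrated with a small, framework-free Python sketch. This is not the GNN or Keras API. Layers are plain Python functions, and a sequential model applies them in list order. A functional model is a graph in which each named node lists its predecessor nodes, loosely mirroring how DefineFuncModel lets you specify connections. `SequentialModel`, `FunctionalModel`, and the toy layers are hypothetical names.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


class SequentialModel:
    """Hypothetical sketch: layers run strictly in the order they are listed."""

    def __init__(self, layers: Sequence[Layer]) -> None:
        self.layers = list(layers)

    def __call__(self, x: Vector) -> Vector:
        for layer in self.layers:
            x = layer(x)
        return x


class FunctionalModel:
    """Hypothetical sketch: each named node declares which nodes feed it.

    Nodes must be added after their predecessors, so insertion order is a
    valid evaluation order (dicts keep insertion order in Python 3.7+).
    """

    def __init__(self, input_name: str, output_name: str) -> None:
        self.input_name = input_name
        self.output_name = output_name
        self.nodes: Dict[str, Tuple[Callable[..., Vector], List[str]]] = {}

    def add(self, name: str, fn: Callable[..., Vector], inputs: Sequence[str]) -> None:
        self.nodes[name] = (fn, list(inputs))

    def __call__(self, x: Vector) -> Vector:
        values: Dict[str, Vector] = {self.input_name: x}
        for name, (fn, inputs) in self.nodes.items():
            values[name] = fn(*(values[i] for i in inputs))
        return values[self.output_name]


# Toy "layers" shared by both models
def scale(k: float) -> Layer:
    return lambda v: [k * e for e in v]


def relu(v: Vector) -> Vector:
    return [max(0.0, e) for e in v]


def add(a: Vector, b: Vector) -> Vector:
    return [p + q for p, q in zip(a, b)]


# A strict stack: scale, then ReLU
seq = SequentialModel([scale(2.0), relu])

# A graph with a skip connection: out = input + relu(-input)
func = FunctionalModel("input", "out")
func.add("neg", scale(-1.0), ["input"])
func.add("act", relu, ["neg"])
func.add("out", add, ["input", "act"])  # two predecessors: not a plain stack
```

The `out` node reads from both the original input and an intermediate node. This kind of skip connection is what a functional definition allows and a strictly sequential one does not.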

- Fit: Train the model using training data.

The Fit function trains the neural network. It lets users set parameters such as the batch size and the number of epochs, giving greater control over the training process. With the upgrade to TensorFlow 2.x, training is more efficient and streamlined.
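The sketch below illustrates what the batch size and epoch parameters control. It is a minimal, dependency-free Python example of mini-batch gradient descent on a one-variable linear model, not GNN's or Keras' training code. The function name `fit`, the learning rate, and the toy data are assumptions for illustration. An epoch is one full pass over the training data, and the weights are updated once per batch.

```python
import random
from typing import List, Tuple


def fit(xs: List[float], ys: List[float], batch_size: int = 4,
        num_epochs: int = 200, lr: float = 0.05, seed: int = 0) -> Tuple[float, float]:
    """Hypothetical sketch: fit y ~ w*x + b by mini-batch gradient descent."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(num_epochs):              # one epoch = one full pass over the data
        rng.shuffle(indices)                 # new batch composition every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradients of the mean squared error over this batch
            grad_w = grad_b = 0.0
            for i in batch:
                err = (w * xs[i] + b) - ys[i]
                grad_w += 2 * err * xs[i]
                grad_b += 2 * err
            # One weight update per batch
            w -= lr * grad_w / len(batch)
            b -= lr * grad_b / len(batch)
    return w, b
```

Suppose there are 20 samples and `batch_size=4`. Each epoch then performs 5 weight updates. A larger batch gives fewer, smoother updates per epoch.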

- EvaluateMod: Evaluate the trained model against test data using evaluation metrics.

- Predict: Predict results using the trained model.

After training the model using the Fit function, the EvaluateMod function helps users assess the model's performance by providing metrics on how well it has been trained. The Predict function allows users to apply the trained neural network to tasks like recognition and classification.
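Here is a rough picture of what evaluation and prediction compute for a classifier. The sketch turns per-class probabilities into predicted labels and scores them with accuracy and categorical cross-entropy. In Keras, `Model.evaluate` reports the loss plus whatever metrics the model was compiled with. The helper names and the choice of metrics here are hypothetical, not EvaluateMod's or Predict's implementation.

```python
import math
from typing import Dict, List, Sequence


def predict_classes(probabilities: Sequence[Sequence[float]]) -> List[int]:
    """Turn each per-class probability vector into the index of its largest entry."""
    return [max(range(len(p)), key=p.__getitem__) for p in probabilities]


def evaluate(probabilities: Sequence[Sequence[float]], labels: Sequence[int]) -> Dict[str, float]:
    """Hypothetical sketch: accuracy and mean categorical cross-entropy."""
    predicted = predict_classes(probabilities)
    accuracy = sum(p == y for p, y in zip(predicted, labels)) / len(labels)
    eps = 1e-12  # guard against log(0)
    loss = -sum(math.log(max(p[y], eps)) for p, y in zip(probabilities, labels)) / len(labels)
    return {"accuracy": accuracy, "loss": loss}
```

Accuracy only checks whether the top class is right. Cross-entropy also penalizes assigning low probability to the true class, which is why the two are usually reported together.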

- GetWeights: Get the weights currently associated with the model.

- SetWeights: Set the weights of the model from the results of GetWeights.

These functions are essential for saving and loading models. Users can call GetWeights to obtain the model's weights and SetWeights to restore them, enabling easy model persistence. Trained models can thus be saved and reloaded, either to continue training later or to deploy them to customers.
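The save/load round trip can be sketched in plain Python. Weights are flattened into simple records, which in an ECL setting could be stored as a DATASET, and later rebuilt into tensors of their original shapes. The record layout, `WeightRecord`, `get_weights`, and `set_weights` are hypothetical illustrations, not GNN's actual tensor format or API.

```python
from typing import Dict, List, NamedTuple, Tuple

Matrix = List[List[float]]


class WeightRecord(NamedTuple):
    """One scalar weight; a hypothetical stand-in for a row in a weights DATASET."""
    tensor_id: int  # which weight tensor (e.g. a layer's kernel or bias)
    index: int      # row-major position inside that tensor
    value: float


def get_weights(tensors: List[Matrix]) -> Tuple[List[WeightRecord], Dict[int, Tuple[int, int]]]:
    """Flatten each 2-D weight tensor into records, remembering its shape."""
    records: List[WeightRecord] = []
    shapes: Dict[int, Tuple[int, int]] = {}
    for tid, matrix in enumerate(tensors):
        rows, cols = len(matrix), len(matrix[0])
        shapes[tid] = (rows, cols)
        for r in range(rows):
            for c in range(cols):
                records.append(WeightRecord(tid, r * cols + c, matrix[r][c]))
    return records, shapes


def set_weights(records: List[WeightRecord], shapes: Dict[int, Tuple[int, int]]) -> List[Matrix]:
    """Rebuild the weight tensors from records; record order does not matter."""
    tensors = [[[0.0] * shapes[t][1] for _ in range(shapes[t][0])] for t in sorted(shapes)]
    for rec in records:
        cols = shapes[rec.tensor_id][1]
        r, c = divmod(rec.index, cols)
        tensors[rec.tensor_id][r][c] = rec.value
    return tensors
```

Each record carries its own position, so the records can be stored, shuffled, or distributed and still be reassembled exactly.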

Create new functions

To enhance user experience and provide greater convenience, we have introduced several new functions in the GNN bundle.

- GetModel: Get the structure and weights of the model. This function gives users comprehensive details of the model, including both its structure and its weights, providing a thorough understanding of the neural network architecture.

- SetModel: Create a Keras model from a previously saved DATASET.
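A weights-only snapshot is not enough to rebuild a model from scratch; a full model snapshot also records the architecture. Keras offers a similar split, for example `Model.to_json()` for the architecture and `Model.get_weights()` for the weights. The sketch below shows the idea, handling only a hypothetical `Dense` layer class and using JSON as the storage format. It is not how GetModel or SetModel serialize models.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Dense:
    """Hypothetical fully connected layer: weights have shape (inputs, units)."""
    units: int
    activation: str            # "linear" or "relu"
    weights: List[List[float]]
    bias: List[float]

    def __call__(self, x: List[float]) -> List[float]:
        out = [sum(x[i] * self.weights[i][j] for i in range(len(x))) + self.bias[j]
               for j in range(self.units)]
        return [max(0.0, v) for v in out] if self.activation == "relu" else out


def get_model(layers: List[Dense]) -> str:
    """Serialize structure (layer type, units, activation) and weights together."""
    return json.dumps([
        {"class": "Dense", "units": layer.units, "activation": layer.activation,
         "weights": layer.weights, "bias": layer.bias}
        for layer in layers
    ])


def set_model(saved: str) -> List[Dense]:
    """Rebuild layers from a saved snapshot; only Dense is supported here."""
    layers = []
    for cfg in json.loads(saved):
        if cfg["class"] != "Dense":
            raise ValueError(f"unsupported layer class: {cfg['class']}")
        layers.append(Dense(cfg["units"], cfg["activation"], cfg["weights"], cfg["bias"]))
    return layers


def run(layers: List[Dense], x: List[float]) -> List[float]:
    """Apply the layers in order (a sequential forward pass)."""
    for layer in layers:
        x = layer(x)
    return x
```

Structure and weights travel in one snapshot. The rebuilt model therefore produces the same outputs as the original without any other code knowing its shape.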

Additionally, we have incorporated some developer-oriented functions tailored for debugging purposes:

- GetSummary: Get the summary of the Keras model. The GetSummary function gives developers access to the underlying TensorFlow information, offering insight into the inner workings of the GNN bundle and helping them identify and resolve any issues that arise.

- IsGPUAvailable: Test whether a GPU is available. The IsGPUAvailable function is a practical tool for developers to determine whether a GPU (graphics processing unit) is available for use. This information is crucial when optimizing the GNN bundle's performance and ensuring efficient hardware utilization.
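A Keras model summary, as printed by Keras' `Model.summary()`, lists each layer's output shape and parameter count. For a fully connected (Dense) layer, the count is `inputs * units + units`: one weight per input-unit pair plus one bias per unit. The hypothetical helper below reproduces only that arithmetic for a stack of Dense layers. Its output format is illustrative, not GetSummary's.

```python
from typing import List, Tuple


def dense_summary(input_dim: int, units_per_layer: List[int]) -> Tuple[List[str], int]:
    """Hypothetical sketch: per-layer parameter counts for stacked Dense layers."""
    lines: List[str] = []
    total = 0
    fan_in = input_dim
    for i, units in enumerate(units_per_layer):
        params = fan_in * units + units   # weight matrix + bias vector
        total += params
        lines.append(f"dense_{i}  output=(None, {units})  params={params}")
        fan_in = units                    # this layer's output feeds the next layer
    lines.append(f"Total params: {total}")
    return lines, total


if __name__ == "__main__":
    summary, _ = dense_summary(5, [256, 256, 1])
    print("\n".join(summary))
```

Consider 5 inputs followed by Dense layers of 256, 256, and 1 units. The counts are 1,536, 65,792, and 257, for 67,585 parameters in total.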

By providing these user-friendly and developer-oriented functions, we strive to make the GNN bundle more accessible, efficient, and robust, catering to the needs of both users and developers alike.
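A GPU check of the kind IsGPUAvailable performs can be written against the TensorFlow 2.x device API, `tf.config.list_physical_devices("GPU")`, available in recent TensorFlow 2.x releases. This is a minimal sketch, not IsGPUAvailable's actual implementation. The function name is hypothetical, and the function returns `False` when TensorFlow is not installed so that the sketch runs anywhere.

```python
def is_gpu_available() -> bool:
    """Hypothetical sketch: True if TensorFlow can see at least one GPU.

    Falls back to False when TensorFlow is not installed.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return False
    return len(tf.config.list_physical_devices("GPU")) > 0


if __name__ == "__main__":
    print("GPU available:", is_gpu_available())
```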

Support for Pre-trained Models

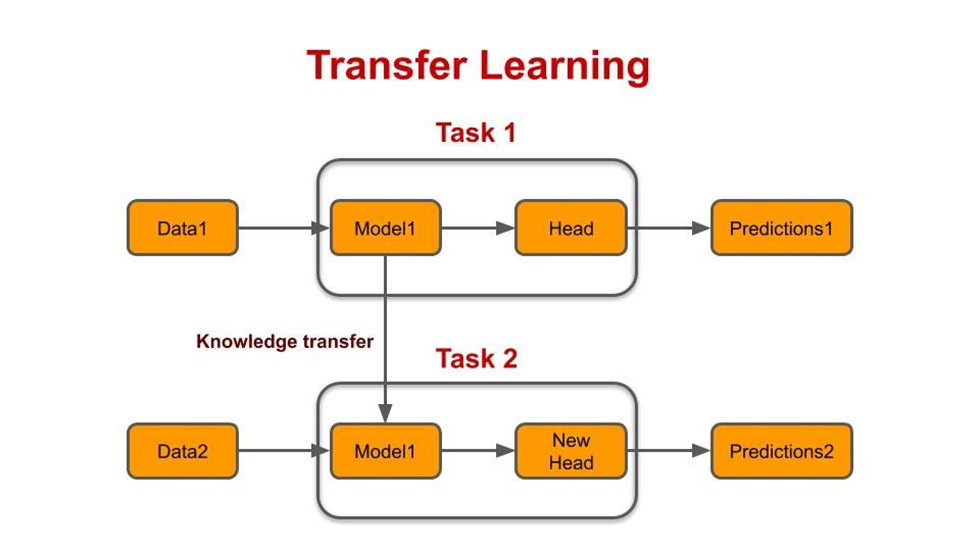

The recently upgraded GNN bundle introduces support for numerous state-of-the-art models, which have been defined and trained to serve specific tasks. With over 70 pre-trained models available, users can readily utilize them for their projects. Additionally, these pre-trained models can act as the foundation for user-specific models, allowing customization by modifying the input and output and expanding the neural network, a technique commonly known as transfer learning.

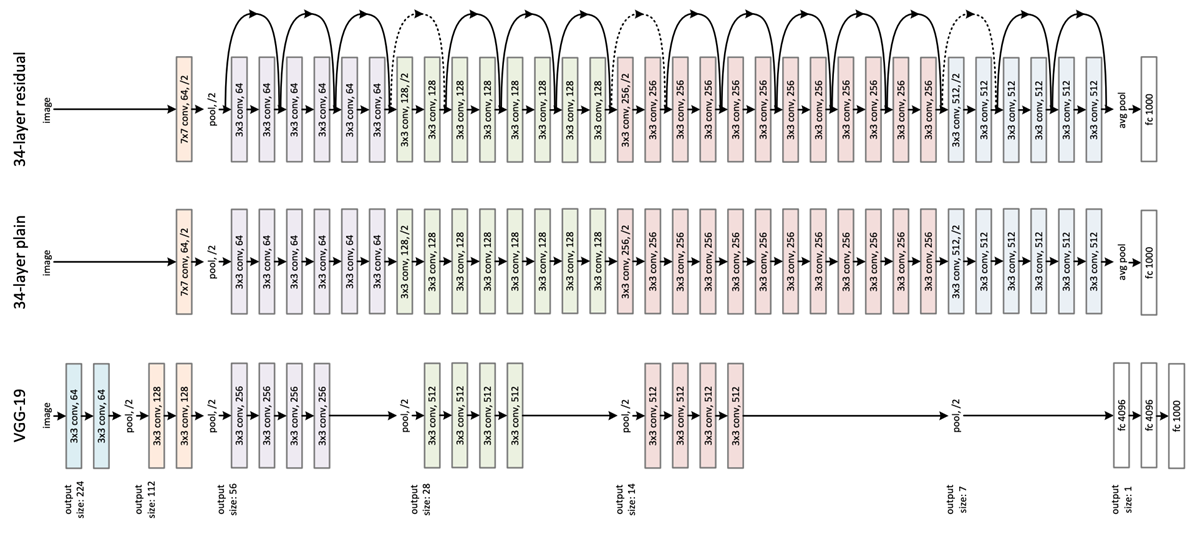

One notable example among the supported pre-trained models is ResNet50, a deep convolutional neural network with 50 layers. The pre-trained version of this network was trained on more than a million images from the ImageNet database. By loading it, users gain access to a powerful image classifier that can categorize images into 1000 object categories, such as keyboards, mice, pencils, and many animals. Thanks to this extensive training, the network has learned rich feature representations for a wide range of images.
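An ImageNet classifier such as ResNet50 outputs a 1000-element probability vector, one entry per category. Turning that vector into labels usually means taking the top-k entries. Here is a minimal stdlib sketch of softmax plus top-k. The label list is a hypothetical placeholder; Keras provides `keras.applications.resnet50.decode_predictions` for the real ImageNet labels.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs: Sequence[float], labels: Sequence[str], k: int = 5) -> List[Tuple[str, float]]:
    """Return the k most probable (label, probability) pairs, best first."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(labels[i], probs[i]) for i in order[:k]]


if __name__ == "__main__":
    logits = [0.0] * 1000                # hypothetical scores for 1000 categories
    logits[3], logits[7] = 5.0, 4.0
    labels = [f"class_{i}" for i in range(1000)]
    print(top_k(softmax(logits), labels, k=2))
```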

Transfer Learning

The enhanced GNN bundle can load numerous pre-trained models, opening up possibilities for various impressive applications. For instance, ResNet50 is commonly used to classify 1000 object categories. If you want to use it specifically to classify vegetables or woolen cloth, you can use the pre-trained ResNet50 as the starting point for training. You modify the model's input and output components while keeping the existing ResNet50 weights, rather than initializing the weights randomly. This approach lets you tailor the model to your specific classification task effectively.

You can also use a pre-trained model as one part of a larger neural network architecture by adding your own layers to it.
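Transfer learning can be sketched without any framework: a frozen base produces features, and only a new head is trained on them. In Keras, freezing is usually done by setting `trainable = False` on the pre-trained base model. Below, `FrozenBase` stands in for a pre-trained network; its weights are hand-picked, not actually pre-trained. `LinearHead` is the new output layer, and all names are hypothetical.

```python
from typing import List


class FrozenBase:
    """Stand-in for a pre-trained network; its weights are never updated."""

    def __init__(self, weights: List[List[float]]) -> None:
        self.weights = weights
        self.trainable = False

    def features(self, x: List[float]) -> List[float]:
        # ReLU(W x): one hidden layer of "pre-trained" features
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.weights]


class LinearHead:
    """New task-specific output layer, trained from zero."""

    def __init__(self, n_features: int) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0

    def __call__(self, f: List[float]) -> float:
        return sum(wi * fi for wi, fi in zip(self.w, f)) + self.b


def fine_tune(base: FrozenBase, head: LinearHead, xs: List[List[float]],
              ys: List[float], epochs: int = 500, lr: float = 0.05) -> LinearHead:
    """Train only the head with per-sample gradient descent on squared error."""
    feats = [base.features(x) for x in xs]   # base is frozen, so features can be precomputed
    for _ in range(epochs):
        for f, y in zip(feats, ys):
            err = head(f) - y
            head.w = [wi - lr * err * fi for wi, fi in zip(head.w, f)]
            head.b -= lr * err
    return head
```

The base never changes during training, so its features only need to be computed once. This is one reason reusing a frozen pre-trained network is much cheaper than training from scratch.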

Performance Testing

TL;DR:

- On the same GPU, the upgraded GNN delivers a 1.25x performance improvement over the previous version built on the TensorFlow 1.x interface.

- With GPU acceleration, the upgraded GNN achieves a 3.71x performance improvement over running on the CPU alone.
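The section does not state exactly how performance was measured. Assuming the reported figures are ratios of runtimes for the same workload, a speedup S translates into a fraction of the original runtime as follows:

```latex
S = \frac{T_{\text{baseline}}}{T_{\text{upgraded}}}
\quad\Longrightarrow\quad
\frac{T_{\text{upgraded}}}{T_{\text{baseline}}} = \frac{1}{S}

S = 1.25:\quad \frac{1}{1.25} = 0.80 \quad (\text{20\% less time})

S = 3.71:\quad \frac{1}{3.71} \approx 0.27 \quad (\text{about 73\% less time})
```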

FAQ

Q: Does it support video processing?

A: Yes!

Q: Did it change the existing API?

A: No. Existing programs can still be used without modification.

Conclusion

A high point during my internship was successfully contributing to a complex coding task, receiving recognition from the team for my innovative approach. This achievement bolstered my confidence and affirmed my passion for software development.

The low point came when I hit unforeseen roadblocks that temporarily slowed the project. In particular, unfamiliar syntax in the ECL programming language often posed significant obstacles. I am truly grateful to Lili and Roger, whose guidance was instrumental in helping me work through these complexities. This setback taught me resilience and the importance of seeking guidance when confronted with unfamiliar challenges.

My main takeaway from this internship is a heightened appreciation for collaborative teamwork, a lesson that will shape my future endeavors in the field of technology.

References

The following article records the problems and solutions I encountered in this project.